Data Engineer Things Newsletter #24 (Oct 2025)

Netflix's journey from a simple dashboard to trillion-row scale. Are platforms really simplifying our lives? Community insights on cutting through architectural complexity.

Hey folks,

I’m writing to you from Cincinnati, where fall is settling in and the leaves are starting to show their colors. The crisp air, football weekends, and streets lined with shades of red and gold make this one of my favorite times of the year. There’s something about the change of seasons that feels a lot like data engineering—constant shifts, small adjustments, and moments of beauty when everything comes together.

Much like the rhythm of fall, data engineering is about balance—keeping systems reliable while adapting to change. Whether it’s new tools, evolving patterns, or fresh ideas from the community, there’s always something to learn and something to improve.

This edition brings a mix of ideas and resources from across our community. Hope you find something here that sparks your curiosity as you sip on your favorite fall drink. 🍂

Happy reading, and thanks for being here.

- Sukanya

📰 Data Pulse

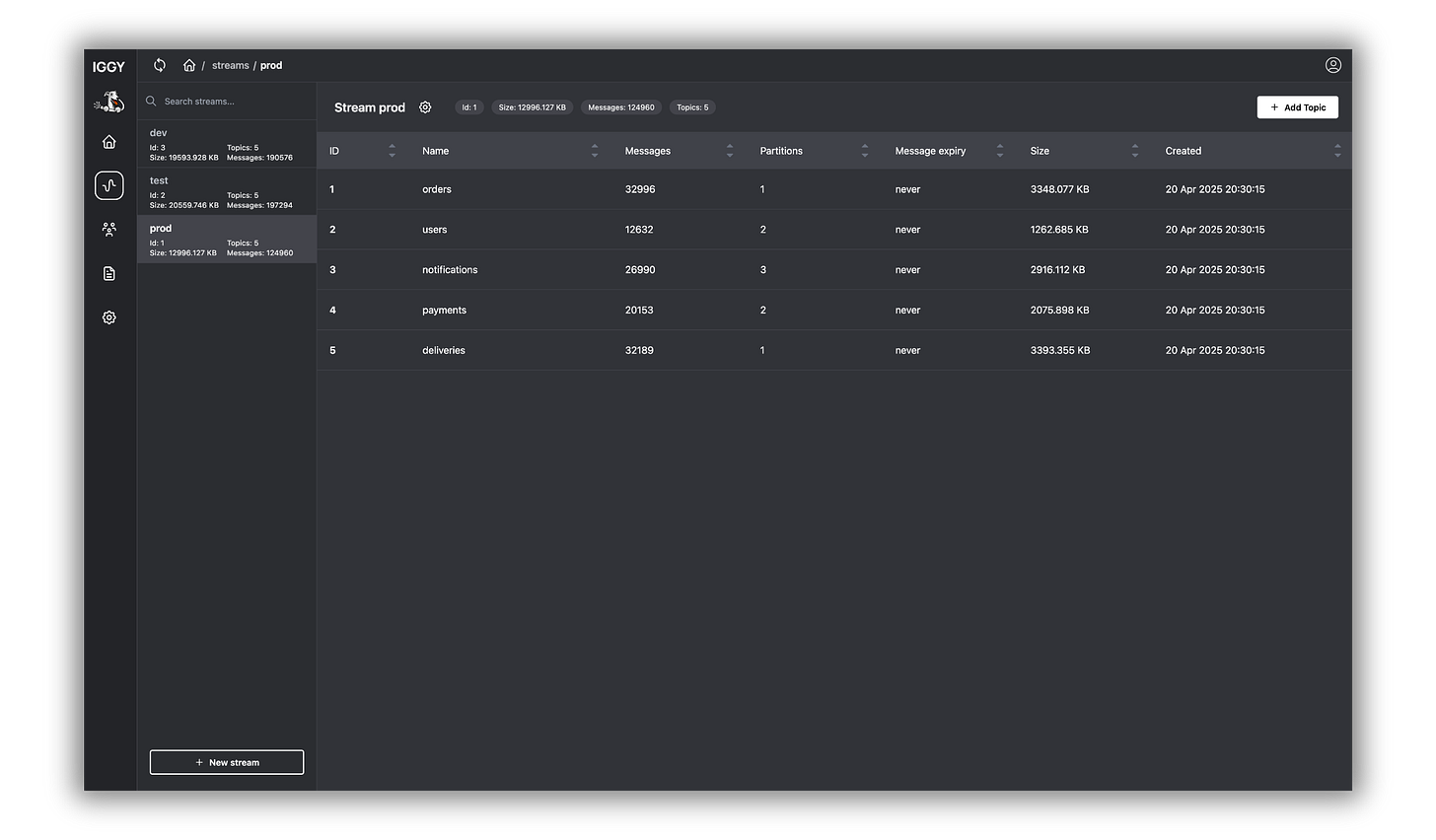

Stream Processing: Apache Iggy is an open-source, persistent message streaming platform written in Rust. It supports QUIC, TCP (custom binary specification), and HTTP (regular REST API) transport protocols and is designed to process millions of messages per second at ultra-low latency.

Apache Iggy web UI (Source)

Trends Report: InfoQ released its annual AI, ML & Data Engineering Trends Report. It expects physical AI and multimodal models to reshape data processing pipelines by enabling real-time analysis of diverse data types in unified workflows. Standardization around MCP (Model Context Protocol) should make it easier to integrate different AI tools with data systems, while commoditized RAG (retrieval-augmented generation) enables rapid deployment of knowledge-based applications. The shift toward agentic AI directly affects data engineering by automating complex ETL processes, data quality monitoring, and infrastructure management.

AI: Introducing Claude Sonnet 4.5! Anthropic released Claude Sonnet 4.5, which scores 77.2% on SWE-bench Verified. Key updates include checkpoints in Claude Code, a VS Code extension, and the new Claude Agent SDK. For DEs, the model’s ability to stay focused on long, multi-step tasks (Anthropic cites 30+ hours) could enable end-to-end pipeline development without losing context, while the Agent SDK makes it possible to build data processing agents for ETL workflows, monitoring, and error recovery.

AI: Genkit is an open-source framework for building full-stack AI-powered applications, built and used in production by Google’s Firebase. It provides SDKs for JavaScript/TypeScript, Go, and Python, with varying levels of stability across languages.

```typescript
// Runs as an ES module (top-level await) and expects a Gemini API key
// in the environment (e.g. GEMINI_API_KEY) for the Google AI plugin.
import { genkit } from 'genkit';
import { googleAI } from '@genkit-ai/google-genai';

const ai = genkit({ plugins: [googleAI()] });

const { text } = await ai.generate({
  model: googleAI.model('gemini-2.5-flash'),
  prompt: 'What is Data Engineer Things?',
});

console.log(text);
```

Conferences: Big Data LDN (London) 2025 took place on September 24–25 at Olympia London, bringing together thousands of data, analytics, and AI specialists to discuss building data-driven businesses. Keep an eye out for the 2025 session playlist on the official YouTube channel.

🔖 Featured Read

Stop That Platform Hype for Good

Author: Bernd Wessely

Every vendor claims their platform is the answer to enterprise IT chaos—but are platforms really simplifying our lives, or just adding another layer of complexity?

This article cuts through the hype, explains why most platforms fail to deliver on their promise, and challenges the idea that “platform engineering” is the silver bullet.

Highlights from the article:

Platform ≠ Productivity: Platforms often become another layer of indirection instead of solving core problems.

One-size-fits-none: What works for one company’s scale, culture, and team may be a terrible fit for another.

Illusion of simplification: Instead of making life easier, platforms can hide complexity until it explodes later.

Focus on fundamentals: Teams thrive when they improve automation, monitoring, and developer experience—not when they chase buzzwords.

Reality check: Engineering isn’t about buying platforms; it’s about building reliable systems that solve your problems.

👉🏼 Read the full article HERE.

📚 Articles of the Month

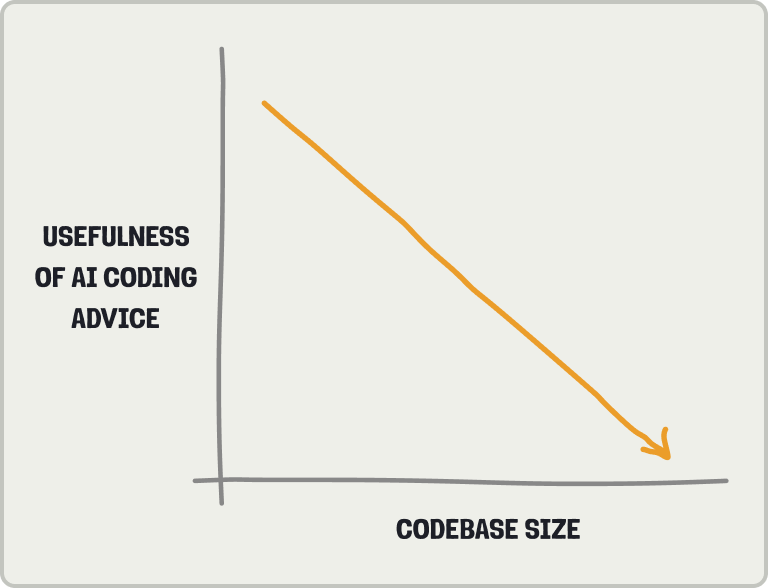

Avoid these AI coding mistakes: PostHog engineers break down what actually works (and doesn’t) when coding with AI in production environments. Key insight: in large codebases (think 8,984 files), you need serious guardrails. AI excels at autocomplete, fixing tests, and research, but struggles with unfamiliar languages and writing quality tests from scratch. Success requires treating it like a skill you develop through experimentation, understanding its limitations, and staying hands-on because you’re ultimately responsible for what ships.

Usefulness of AI coding advice (Source)

Scaling Muse: How Netflix Powers Data-Driven Creative Insights at Trillion-Row Scale: Learn how Netflix scaled Muse to trillion-row datasets by combining Druid, Iceberg, and probabilistic HyperLogLog (HLL) sketches to balance speed with accuracy. A masterclass in high-performance data engineering.
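To see why HLL sketches trade a small, bounded error for speed on distinct counts, here is a minimal HyperLogLog in TypeScript. It is a textbook sketch, not Netflix’s or Druid’s implementation: the hash (FNV-1a followed by the MurmurHash3 finalizer), the precision `p = 12` (4,096 registers, roughly 1.6% standard error), and the class name are our assumptions, and the large-range correction is omitted. The properties that matter are fixed memory regardless of input size and lossless merging via a register-wise max.

```typescript
// Minimal HyperLogLog sketch for approximate distinct counts (illustrative only).

// Assumed 32-bit hash: FNV-1a over UTF-16 code units, then the MurmurHash3
// fmix32 finalizer to spread bits evenly. Not Netflix's or Druid's hash.
function hash32(value: string): number {
  let h = 0x811c9dc5;
  for (let i = 0; i < value.length; i++) {
    h ^= value.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

class HyperLogLog {
  private readonly p: number; // number of index bits
  private readonly m: number; // number of registers = 2^p
  private readonly registers: Uint8Array;

  // p = 12 gives 4,096 registers and ~1.6% standard error.
  constructor(p = 12) {
    this.p = p;
    this.m = 1 << p;
    this.registers = new Uint8Array(this.m);
  }

  add(value: string): void {
    const h = hash32(value);
    const index = h >>> (32 - this.p); // top p bits choose a register
    const rest = (h << this.p) >>> 0; // remaining 32 - p bits, left-aligned
    // Rank = 1-based position of the first 1-bit in the remaining bits.
    const rank = rest === 0 ? 32 - this.p + 1 : Math.clz32(rest) + 1;
    if (rank > this.registers[index]) this.registers[index] = rank;
  }

  estimate(): number {
    const alpha = 0.7213 / (1 + 1.079 / this.m); // bias constant, valid for m >= 128
    let sum = 0;
    let zeros = 0;
    for (let i = 0; i < this.m; i++) {
      sum += Math.pow(2, -this.registers[i]);
      if (this.registers[i] === 0) zeros++;
    }
    const raw = (alpha * this.m * this.m) / sum;
    // Small-range correction: linear counting while many registers are empty.
    if (raw <= 2.5 * this.m && zeros > 0) return this.m * Math.log(this.m / zeros);
    return raw; // large-range correction omitted for brevity
  }

  // Union of two sketches: register-wise max. Both must use the same p.
  merge(other: HyperLogLog): void {
    if (other.p !== this.p) throw new Error("precision mismatch");
    for (let i = 0; i < this.m; i++) {
      this.registers[i] = Math.max(this.registers[i], other.registers[i]);
    }
  }
}
```

For example, sketches built separately over two overlapping ID ranges can be merged to estimate the size of their union without revisiting the raw rows, which is what makes pre-aggregated distinct counts cheap to combine at query time.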

Going direct: Empowering teams to communicate openly across departments and levels, without following org chart hierarchies, enables faster decision-making, more collaboration, and fewer bottlenecks. For data engineers, direct communication is crucial for resolving pipeline issues quickly during incidents, enabling cross-team data integration projects, and making tactical decisions autonomously while escalating only high-risk architectural changes. All of this helps data teams move faster in today’s leaner organizations.

Note To My Younger Self: In this reflective piece, a Google engineering leader shares wisdom he wishes he had known earlier, from avoiding the chase for validation to embracing uncertainty. A valuable read for data engineers thinking long-term about their careers.

(✍️ Interested in publishing articles on DET on Medium? Read submission guidelines here.)

🎨 Community Spotlight

Please introduce yourself briefly to the DET community and share how your journey has shaped your unique perspective on data systems.

Hi everyone! I’m Anandaganesh Balakrishnan, a Data Engineering leader with over 18 years of experience designing and modernizing enterprise data infrastructure across the banking, trading, real estate investment, and utilities sectors. I’ve led major transformation projects at ING Bank, Susquehanna International Group (SIG), and Roc360, focusing on building data systems that are faster, more scalable, and more efficient. Working across Asia, Europe, and North America has been an enriching journey that’s taught me to adapt to different cultures, collaborate with diverse teams, and see how great ideas can grow into large-scale innovation.

What was the most challenging “merge conflict” you had to resolve when transitioning from traditional systems to cloud-native architectures?

Architecting modern, scalable cloud-native data platforms across organizations meant redoing the data flow and the underlying technology, of course, but it also meant getting teams, processes, and everyone’s way of thinking on board with new tools. Getting past those disagreements taught me that updating things isn’t just about the tech itself; it’s about guiding changes in how people work, the steps they follow, and the tools they use.

What’s your framework for cutting through architectural complexity when modernizing enterprise data platforms, and what’s one common mistake you see organizations make during these transformations?

When modernizing enterprise data platforms, the key to managing complexity is keeping strategy (business/data) and execution in sync. It starts with having a clear vision of what the business wants to achieve, whether that’s faster insights, lower costs, or stronger compliance, and making sure every architectural choice supports those goals. On the ground, this means building modular, flexible systems that can adapt quickly today while scaling for tomorrow. A common pitfall I see is teams rushing into tool choices or cloud migrations without that alignment, which often leads to fragmented systems and unnecessary technical debt. Real transformation happens when strategy guides every architectural decision and day-to-day actions work together to bring that vision to life.

As someone who mentors others and has led strategic programs, what’s the most important skill data professionals need to develop to transition from IC to enterprise architect, and how should they build credibility with C-level executives?

The most important skill for data professionals looking to grow into enterprise architects is strategic thinking: seeing how every technical decision connects to the bigger business picture. It is about understanding how data drives revenue, manages risk, and supports the company’s overall objectives. To earn credibility with C-level leaders, speak the language of business outcomes rather than outputs, and show how your architectural choices drive measurable value, save costs, or create a competitive edge. When you align technology efforts with what really matters to the business, you help bridge the gap between engineering and leadership. That alignment builds trust, strengthens collaboration, and shows that technology is a true partner in driving the company’s success.

Your career has involved positions as a project leader, database developer, data engineer, technical editor, and more. With your broad perspective on the data job landscape, what are the key roles for data professionals in the future, and what emerging technologies should data professionals be positioning themselves for?

Looking ahead, the most impactful roles in data will center on data architecture, agentic data stacks, platform engineering, and AI-ready infrastructure. As organizations move toward intelligent, automated systems, data professionals will need to design sustainable data platforms, infrastructures, and architectures that enable self-optimizing, adaptive data pipelines, which I refer to as agentic data engineering.

On your LinkedIn profile, you mention your favorite books: Atomic Habits, The Black Swan, and Money - Master the Game. For data engineers, what are the most impactful lessons you’ve learned from these books? Feel free to share one lesson from each.

Atomic Habits instilled in me the discipline to stay focused and execute projects with consistency and efficiency. The Black Swan, my favorite among them, deepened my understanding of how rare, unpredictable events, like today’s AI disruption, can reshape entire industries, reinforcing the need to unlearn and relearn continuously. Money - Master the Game taught me that sharing knowledge and empowering others not only creates collective progress but also leads to personal and professional growth.

What advice would you give to career starters who want to get into the world of data engineering? What should they focus on first?

If you’re beginning as a data engineer, get the basics down before you mess around with fancy tools. Get good with Python, SQL, and distributed computing; these skills are super important. Also, learn how data helps businesses make decisions and make money.

💡 DE Tip of the Month

Before adding new orchestration or scheduling tools, explore the capabilities of what you already use. For example, Databricks Jobs, Airflow, and Prefect all support retries, alerting, and conditional workflows out of the box, and that is often enough for most pipelines.

If you outgrow these basics, you can consider specialized workflow enhancements:

Dagster: Strong focus on data-aware orchestration and observability

Argo Workflows: Kubernetes-native workflows for large-scale automation

Mage: Lightweight and friendly alternative for quick pipeline building

Begin with what’s built-in; you’ll be surprised how much complexity it saves.
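For a sense of what those built-in settings do for you, here is a tool-agnostic TypeScript sketch of the three features the tip names: retries with exponential backoff, a failure alert hook, and a conditional branch. The names (`RetryPolicy`, `runWithRetries`, `dailyPipeline`, and the step names `load`/`skip_load`) are hypothetical, and this is not any orchestrator’s API. In Airflow the same behavior is declared with task arguments such as `retries`, `retry_delay`, and `on_failure_callback` (plus `BranchPythonOperator` for branching), and in Prefect with `@task(retries=..., retry_delay_seconds=...)`.

```typescript
// Hypothetical, tool-agnostic sketch of orchestrator-style retries,
// failure alerting, and conditional branching. Not any orchestrator's API.

interface RetryPolicy {
  retries: number; // extra attempts allowed after the first failure
  baseDelayMs: number; // wait before the first retry; doubles on each retry
  onFailure?: (error: unknown, attempts: number) => void; // alert hook
}

function sleep(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

async function runWithRetries<T>(task: () => Promise<T>, policy: RetryPolicy): Promise<T> {
  let attempts = 0;
  for (;;) {
    attempts++;
    try {
      return await task();
    } catch (error) {
      if (attempts > policy.retries) {
        policy.onFailure?.(error, attempts); // alert once, after the final attempt
        throw error;
      }
      // Exponential backoff: base, 2 * base, 4 * base, ...
      await sleep(policy.baseDelayMs * 2 ** (attempts - 1));
    }
  }
}

// Conditional workflow: pick the downstream step from the upstream result.
async function dailyPipeline(
  extract: () => Promise<number>,
  policy: RetryPolicy,
): Promise<"load" | "skip_load"> {
  const rowCount = await runWithRetries(extract, policy);
  return rowCount > 0 ? "load" : "skip_load";
}
```

Even this much hand-written code is what the tip suggests avoiding: an orchestrator also persists attempt state, shows it in a UI, and routes alerts to your channels, none of which this sketch does.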

Let us know what you like the most in the newsletter. See you next time!

Cheers,

Sukanya and Ananda

ℹ️ About Data Engineer Things

Data Engineer Things (DET) is a global community built by data engineers for data engineers. Subscribe to the newsletter and follow us on LinkedIn to gain access to exclusive learning resources and networking opportunities, including articles, webinars, meetups, conferences, mentorship, and much more.